How an AI copilot helps QA teams automate test creation

AI accelerates test creation by turning requirements, code and user flows into usable test drafts. First, a copilot reads user stories and code diffs. Next, it proposes test case outlines, unit tests and UI steps. For context, Gartner forecasts rapid uptake of code assistants: according to industry reports, by 2028 three‑quarters of enterprise software engineers will use AI code assistants. Teams that adopt a copilot often shorten the time from user story to automated test.
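The intake step can be pictured as packaging a story, its acceptance criteria and the related diff into a single request. The sketch below is a minimal illustration under stated assumptions: `UserStory` and `build_test_prompt` are hypothetical names, the prompt wording is only an example, and no real copilot API is called.

```python
from dataclasses import dataclass, field


@dataclass
class UserStory:
    """Hypothetical container for the inputs a copilot reads."""
    key: str
    title: str
    acceptance_criteria: list[str] = field(default_factory=list)


def build_test_prompt(story: UserStory, diff: str, max_diff_chars: int = 4000) -> str:
    """Combine a story, its acceptance criteria and a code diff into one prompt string."""
    criteria = "\n".join(f"- {c}" for c in story.acceptance_criteria) or "- (none provided)"
    # Trim very large diffs so the request stays within the copilot's context budget.
    if len(diff) > max_diff_chars:
        diff = diff[:max_diff_chars] + "\n[diff truncated]"
    return (
        f"Story {story.key}: {story.title}\n"
        f"Acceptance criteria:\n{criteria}\n\n"
        f"Code diff:\n{diff}\n\n"
        "Propose test case outlines (unit, API and UI steps) that cover every criterion."
    )
```

Keeping the acceptance criteria as separate bullet lines makes it easier to check afterwards that every criterion received at least one proposed test.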

For example, AI can draft unit tests much as Diffblue does, build UI flows in the style of Testim or Mabl, and propose visual assertions similar to those in Applitools. It can also produce natural‑language tests that read like acceptance criteria, as Functionize does. These outputs range from short test case snippets to full test scripts. For unit testing, have the copilot generate JUnit tests and then refine them. For UI work, ask the copilot to export steps into your testing framework, for example as Playwright code. This removes much of the repetitive scripting work.
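To make the "generate, then refine" loop concrete, here is a small Python `unittest` sketch. The `apply_discount` function and its rules are hypothetical; the first two tests stand in for what a copilot might draft from acceptance criteria, and the third is the kind of edge case a human reviewer typically adds. The same pattern applies to JUnit test classes or Playwright UI tests.

```python
import unittest


def apply_discount(total: float, percent: float) -> float:
    """Hypothetical code under test: apply a percentage discount, rounded to cents."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(total * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    # Drafted from the acceptance criteria (the kind of output a copilot proposes).
    def test_ten_percent_discount(self):
        self.assertEqual(apply_discount(200.0, 10), 180.0)

    def test_zero_percent_leaves_total_unchanged(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    # Added by the human reviewer: out-of-range input must be rejected, not applied.
    def test_rejects_out_of_range_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(50.0, 150)
```

Saved as, for example, `test_discount.py`, the file runs with `python -m unittest`, which discovers `test*.py` files in the current directory.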

Measure impact with simple KPIs. Track the time to the first automated test and the percentage increase in test coverage per sprint. Also track the number of test scenario drafts created per user story. A practical first step is a two‑week pilot that feeds three to six user stories into an AI copilot and then compares the test yield of manual and AI‑assisted authoring. The pilot can show coverage gains, expose gaps in how the copilot handles context, and indicate how easily the resulting tests map into the CI pipeline.
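The pilot comparison is simple arithmetic. This sketch assumes the team records, for each arm of the pilot (manual versus AI‑assisted), the hours until the first automated test is merged, the drafts produced, the drafts accepted after review, and line coverage at the start and end. The `PilotArm` fields are hypothetical names, and using the acceptance rate as a proxy for "yield" is an assumption, not a standard definition.

```python
from dataclasses import dataclass


@dataclass
class PilotArm:
    """Hypothetical per-arm measurements collected during a two-week pilot."""
    stories: int                 # user stories fed into this arm
    hours_to_first_test: float   # elapsed hours until the first automated test was merged
    drafts: int                  # test scenario drafts produced
    accepted: int                # drafts that survived review and were merged
    coverage_before: float       # line coverage (%) at pilot start
    coverage_after: float        # line coverage (%) at pilot end


def summarize(arm: PilotArm) -> dict[str, float]:
    """Compute the pilot KPIs for one arm."""
    return {
        "hours_to_first_test": arm.hours_to_first_test,
        "drafts_per_story": arm.drafts / arm.stories,
        # Share of drafts that reviewers accepted, used here as a proxy for yield.
        "acceptance_rate": arm.accepted / arm.drafts if arm.drafts else 0.0,
        # Percentage-point change in coverage over the pilot.
        "coverage_gain_pts": arm.coverage_after - arm.coverage_before,
    }
```

Calling `summarize` on both arms gives two comparable dictionaries; any figures you plug in should come from your own pilot, not from this example.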

In practice, integrate copilot suggestions into the PR workflow. Let the copilot propose test files on the feature branch, then have a QA engineer or tester review them before merge. This reduces time spent on boilerplate and makes it clear to the team that the copilot speeds up authoring without replacing human judgment. For further reading on automating operational messages and similar workflows, see our resource on how to scale logistics operations with AI agents.
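One way to enforce the review step is a small merge check in CI. The sketch assumes test files live under a `tests/` directory and that copilot-authored changes carry a hypothetical `ai-generated` label on the pull request; neither convention comes from a specific code host, so map them to whatever your platform exposes.

```python
def review_gate(changed_files: list[str], labels: set[str],
                approver_roles: list[str]) -> tuple[bool, str]:
    """Return (allowed, reason) for merging a PR that may contain AI-proposed tests.

    Assumptions: tests live under tests/, and AI-authored changes are labelled
    'ai-generated' (both hypothetical conventions).
    """
    touches_tests = any(path.startswith("tests/") for path in changed_files)
    if "ai-generated" not in labels or not touches_tests:
        return True, "no AI-proposed tests in this PR"
    if "qa" in approver_roles:
        return True, "AI-proposed tests approved by QA"
    return False, "AI-proposed tests need a QA approval before merge"
```

Returning a reason string alongside the decision makes the CI message self-explanatory when a merge is blocked.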

Use AI testing tools to automate the QA process and reduce test maintenance

AI testing tools offer self‑healing locators and element‑identification features that can substantially reduce flakiness. For instance, tools such as Testim and Mabl adapt selectors when the DOM changes, so teams spend fewer hours fixing brittle test scripts. These tools can also annotate visual diffs, which helps keep visual regression checks accurate. Choose an automated test runner that supports self‑healing so the testing pipeline stays reliable.
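Vendors do not publish their self-healing internals, so the following is only a simplified model of the idea, not how Testim, Mabl or any other product works. It assumes a page is represented as a list of element attribute dictionaries and that each test step stores a primary locator plus ranked fallbacks. When the primary locator stops matching, the helper tries the fallbacks, heals only on an unambiguous match, and logs the heal so a human can review it.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Locator:
    """Attribute-equality locator, e.g. {"id": "submit"} (hypothetical model)."""
    attrs: dict[str, str]

    def matches(self, element: dict[str, str]) -> bool:
        return all(element.get(k) == v for k, v in self.attrs.items())


@dataclass
class Step:
    name: str
    primary: Locator
    fallbacks: list[Locator] = field(default_factory=list)


def find_with_healing(step: Step, page: list[dict[str, str]],
                      heal_log: list[dict]) -> dict[str, str] | None:
    """Locate the element for a step, falling back to alternates and logging any heal."""
    for element in page:  # first element matching the primary locator wins
        if step.primary.matches(element):
            return element
    for candidate in step.fallbacks:
        hits = [e for e in page if candidate.matches(e)]
        if len(hits) == 1:  # heal only when the fallback is unambiguous
            heal_log.append({"step": step.name, "old": step.primary.attrs, "new": candidate.attrs})
            return hits[0]
    return None  # genuine failure: surface it instead of guessing
```

The heal log is the telemetry the guardrails below depend on: each entry records which locator replaced which, so a reviewer can judge whether the test still checks what it was meant to.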

However, AI does not remove the need for guardrails. Review auto‑updated tests before release and keep a human in the loop. As one industry whitepaper puts it, "AI can support many aspects of QA, but it also introduces critical risks that demand careful attention." Therefore, maintain approval gates and keep change logs for automatic updates. Also, use telemetry to detect when self‑healing may have shifted a test's intent.

To implement this, integrate the testing tool into CI so that tests, including any self‑healed updates, run automatically on every PR. Then set a rule: self‑healed changes must be reviewed within the release window. Track the reduction in flaky failures and in maintenance hours per sprint as success metrics, and use dashboards to expose trends and catch regressions early. Teams can also automatically roll back self‑healed changes that a tester flags as regressions.
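The review rule and one of the success metrics can be checked mechanically. This sketch assumes each heal record carries a `healed_at` timestamp and an optional `reviewed_by` field, and that the release window is a fixed number of days (three here, an arbitrary example); `overdue_heals` and `flaky_rate` are hypothetical helper names. A flaky run is counted as one that failed first and passed on retry against the same commit, which is one common definition among several.

```python
from datetime import datetime, timedelta


def overdue_heals(heals: list[dict], now: datetime,
                  window: timedelta = timedelta(days=3)) -> list[dict]:
    """Return self-healed changes still unreviewed after the review window has elapsed."""
    return [h for h in heals
            if h.get("reviewed_by") is None and now - h["healed_at"] > window]


def flaky_rate(runs: list[tuple[str, bool, bool]]) -> float:
    """Share of runs that failed on the first attempt and passed on retry.

    Each run is (test_id, first_attempt_passed, retry_passed).
    """
    if not runs:
        return 0.0
    flaky = sum(1 for _, first, retry in runs if not first and retry)
    return flaky / len(runs)
```

A CI job could fail the release when `overdue_heals` returns anything, and chart `flaky_rate` per sprint on the dashboard.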

In practice, combine self‑healing with lightweight governance. Keep anonymised historical test runs for model training. Connect the tool to your test management system so approvals and comments stay linked, which makes it easier to audit who accepted each AI change. For teams managing heavy email workflows or ticketed incidents, our platform shows how to ground AI actions in operational data and rules; see our guide on automating logistics emails with Google Workspace and virtualworkforce.ai. In short, AI testing tools cut maintenance, but only with checks and balances in place.

Drowning in emails? Here’s your way out

Save hours every day as AI Agents draft emails directly in Outlook or Gmail, giving your team more time to focus on high-value work.

Prioritise testing with AI in QA: defect prediction, test selection and feedback loops

AI in QA can predict where defects are most likely to appear. Using commit history, telemetry and past defects, ML models rank modules by risk. QA teams can then run focused suites on the high‑risk areas instead of testing everything equally. A targeted approach can reduce escaped defects and speed up releases. For example, run smoke tests and a targeted regression suite on a module flagged as high‑risk, and run the full regression suite only when needed.
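A production setup would train an ML model on these signals, but the selection logic downstream of the model is easy to show. The sketch below swaps in a hand-weighted heuristic as a stand-in for a trained model: it scores each module from recent commit churn and historical defect counts (telemetry is left out for brevity), then maps the score to a suite. The weights, the 0.5 threshold and the module names are illustrative assumptions.

```python
def risk_scores(churn: dict[str, int], defects: dict[str, int],
                w_churn: float = 0.4, w_defects: float = 0.6) -> dict[str, float]:
    """Heuristic risk score in [0, 1] per module (a stand-in for a trained model's output)."""
    max_churn = max(churn.values(), default=0) or 1
    max_defects = max(defects.values(), default=0) or 1
    modules = set(churn) | set(defects)
    return {
        m: w_churn * churn.get(m, 0) / max_churn + w_defects * defects.get(m, 0) / max_defects
        for m in modules
    }


def plan_suites(scores: dict[str, float], threshold: float = 0.5) -> dict[str, str]:
    """High-risk modules get smoke plus targeted regression; the rest get smoke only."""
    return {m: ("smoke+targeted" if s >= threshold else "smoke")
            for m, s in sorted(scores.items())}
```

Replacing `risk_scores` with real model predictions leaves `plan_suites` unchanged, which keeps the threshold easy to tune separately from the model.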

Research on AI‑assisted defect prediction suggests it can raise detection rates and let teams focus scarce testing effort where it matters most. One industry report highlights productivity gains from AI‑assisted engineering but notes that quality improvements vary by team and setup. Therefore, treat model outputs as guides, not absolutes.

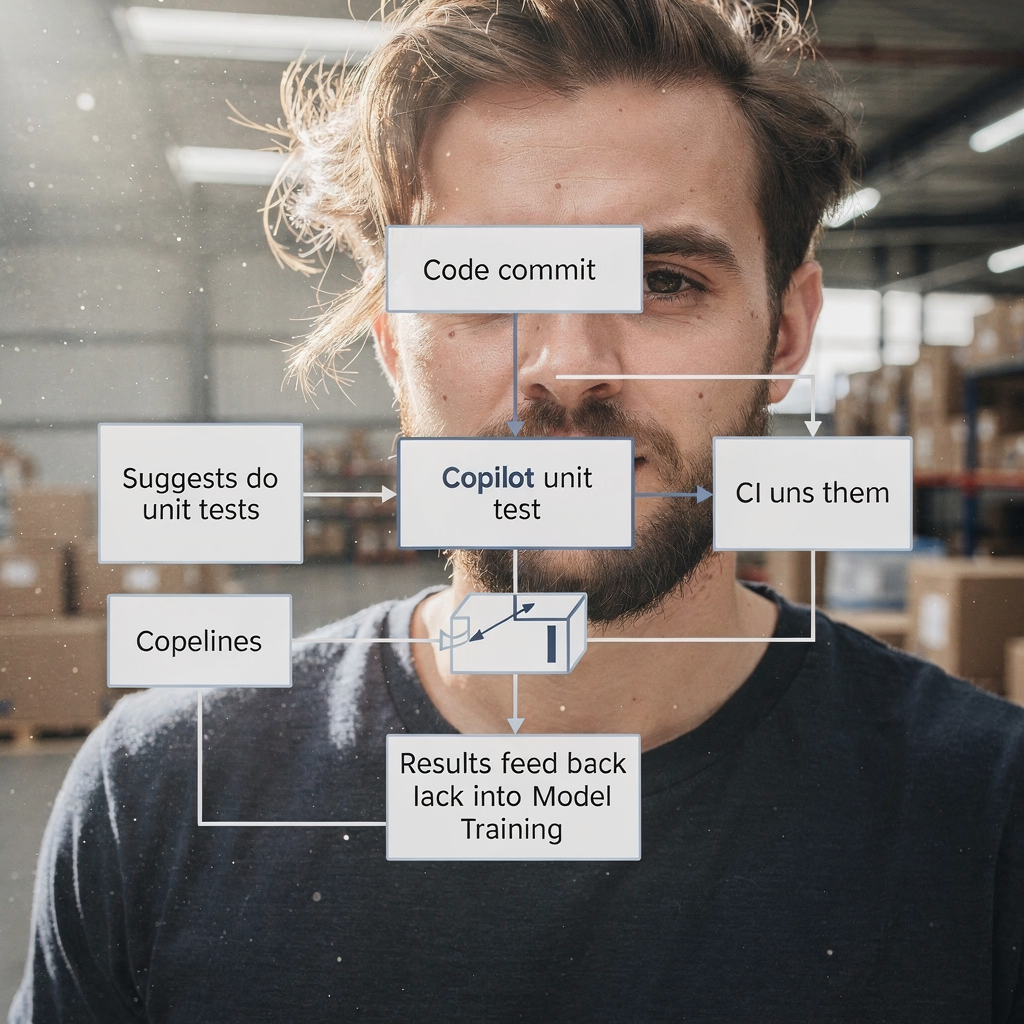

Set up continuous feedback loops from production incidents back into model training. Feed anonymised telemetry and incident tags into the training set, then retrain periodically so risk predictions stay aligned with recent changes. Track escaped defects in production, the model's false negative rate, and the percentage of tests skipped by risk‑based selection. Use these measures to tune thresholds and to decide when to expand the targeted suite.
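The three tracking measures reduce to a few ratios. This sketch assumes you can attribute each escaped production defect to a module and know which modules the model flagged as high-risk at release time; the function and argument names are hypothetical. The false negative rate here is one workable definition: the share of escaped defects that landed in modules the model did not flag.

```python
def feedback_metrics(flagged: set[str], escaped: dict[str, int],
                     tests_total: int, tests_skipped: int) -> dict[str, float]:
    """Post-release metrics for tuning risk-based test selection.

    flagged: modules rated high-risk before release
    escaped: module -> number of defects that reached production
    """
    total_escaped = sum(escaped.values())
    # Defects in modules the model rated low-risk count as misses.
    missed = sum(n for module, n in escaped.items() if module not in flagged)
    return {
        "escaped_defects": float(total_escaped),
        "false_negative_rate": missed / total_escaped if total_escaped else 0.0,
        "pct_tests_skipped": 100.0 * tests_skipped / tests_total if tests_total else 0.0,
    }
```

A rising false negative rate suggests lowering the threshold or widening the targeted suite; a high skip percentage with a stable false negative rate suggests the selection is paying off.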

Also, involve QA engineers when tuning models. A QA engineer should validate model suggestions and label false positives. These labels improve the models over time, help teams triage issues faster, and surface patterns that were previously hidden in noise. For organizations managing operational email and process automation, the same feedback‑loop ideas help fix false classifications; see our explanation of how virtualworkforce.ai reduces handling time and closes feedback cycles.
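When QA engineers label the model's flags, those labels give a direct read on precision: the share of flagged modules that really had problems. The sketch assumes a hypothetical label format in which each reviewed flag is marked `true_positive` or `false_positive`; the labelled records can then go back into the training set alongside incident tags.

```python
from collections import Counter


def label_summary(labels: list[tuple[str, str]]) -> dict[str, float]:
    """Summarize QA labels on model flags.

    Each entry is (module, verdict), verdict being 'true_positive' or 'false_positive'.
    """
    counts = Counter(verdict for _, verdict in labels)
    unknown = set(counts) - {"true_positive", "false_positive"}
    if unknown:
        raise ValueError(f"unexpected verdicts: {sorted(unknown)}")
    reviewed = counts["true_positive"] + counts["false_positive"]
    return {
        "reviewed": float(reviewed),
        "precision": counts["true_positive"] / reviewed if reviewed else 0.0,
    }
```

Rejecting unknown verdicts keeps typos in the labelling workflow from silently skewing the precision figure.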

Integrate AI tools with existing test automation and test management

To integrate AI into an existing stack, follow three pragmatic steps. First, add an AI copilot to the repository as a developer or QA assistant. Second, connect the testing platform to your test management system and CI/CD. Third, map AI outputs to your existing testing frameworks, such as Selenium, Playwright or JUnit. For example, let the copilot open PRs that add Playwright tests and link each one to the corresponding ticket ID.

Checklist items for integration include access to the codebase, anonymised historical test runs, telemetry, and a tag mapping between your test management system and the AI outputs. This metadata allows the AI to recommend relevant test scenarios. Also, maintain traceability: each AI‑generated test case should point back to the requirement and the PR that introduced it. This improves auditability and reduces duplicate tests.
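Traceability is easiest to enforce when every generated test carries the same small metadata record. The sketch below assumes a hypothetical `GeneratedTest` record with a requirement ID and a PR reference, and flags records that are missing either link or that duplicate an earlier test for the same requirement and scenario; the ID formats in any data you feed it are your own.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GeneratedTest:
    """Hypothetical metadata attached to each AI-generated test case."""
    test_id: str
    requirement_id: str   # e.g. a ticket key; empty string if missing
    pr_ref: str           # the PR that introduced the test; empty string if missing
    scenario: str


def audit(tests: list[GeneratedTest]) -> dict[str, list[str]]:
    """Report tests that break traceability or duplicate an earlier test."""
    missing, duplicates = [], []
    seen: dict[tuple[str, str], str] = {}
    for t in tests:
        if not t.requirement_id or not t.pr_ref:
            missing.append(t.test_id)
        # Normalize the scenario text so trivial case/whitespace differences still count as duplicates.
        key = (t.requirement_id, t.scenario.strip().lower())
        if key in seen:
            duplicates.append(t.test_id)
        else:
            seen[key] = t.test_id
    return {"missing_links": missing, "duplicates": duplicates}
```

Running `audit` in CI on the metadata exported from your test management system turns the traceability rule into a failing check rather than a guideline.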

A quick win is to let the copilot propose tests as part of pull‑request checks. For example, when a PR changes a payment flow, have the AI suggest related test scenarios and create functional and regression tests for them. Reviewers can then accept or refine the generated test scripts, which keeps the workflow fast while preserving quality. In addition, connect the testing tool to a shared dashboard so stakeholders see coverage and failures in one place.
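Before asking a copilot for scenarios, a PR check has to decide which areas a change touches. A minimal sketch, assuming a hand-maintained mapping from path prefixes to scenario tags (the paths and tags in `AREA_MAP` are hypothetical), could look like this; the resulting tags would be passed to the copilot as context and attached to the PR.

```python
def scenarios_for_change(changed_files: list[str],
                         area_map: dict[str, list[str]]) -> list[str]:
    """Collect scenario tags whose path prefix matches any changed file (order-preserving, deduplicated)."""
    tags: list[str] = []
    for path in changed_files:
        for prefix, area_tags in area_map.items():
            if path.startswith(prefix):
                for tag in area_tags:
                    if tag not in tags:
                        tags.append(tag)
    return tags


# Hypothetical mapping for a shop with payment and cart code.
AREA_MAP = {
    "src/payments/": ["functional:payment-success", "functional:payment-declined",
                      "regression:checkout"],
    "src/cart/": ["regression:checkout", "functional:cart-totals"],
}
```

A static map is crude compared with what a copilot can infer from the diff, but it gives the AI a predictable starting point and makes the chosen scenarios easy for reviewers to audit.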

In practice, use a lightweight governance model. Maintain a test automation backlog where proposed AI changes land, and assign a tester to validate each one. This prevents unchecked drift in the suite. Also, make sure your testing frameworks are compatible; for instance, modern Playwright setups accept generated test code easily. Finally, when you integrate AI tools, review security, data access policies and compliance. To see how AI agents can be grounded in operational data, read our article on scaling logistics operations without hiring.

Use cases and QA solutions: who benefits and where to apply AI in QA

AI benefits many use cases across the QA lifecycle. For legacy codebases, AI can generate unit tests to increase coverage quickly. For regression suites, AI helps keep UI and API tests current. For exploratory testing, AI augments testers by proposing edge cases or unusual input sequences. For visual regression, AI helps detect subtle layout regressions. These are concrete QA solutions that teams can deploy.

Target audiences include QA teams, QA engineers and testers. Testers shift from routine scripting to scenario design and exploratory quality engineering, so the tester's role becomes more strategic. Test automation becomes a collaboration between human judgment and AI proposals. As a result, teams can focus on improving test scenarios and root‑cause analysis.

Measurable benefits include time saved per release, test coverage gains and faster root‑cause analysis. For a pilot, pick a critical product area such as a payment flow and use AI to create automated tests at the unit, API and UI layers. Then compare before‑and‑after outcomes: time to create tests, escaped defects, and regression test execution time. A focused trial like this gives a clear read on ROI and useful lessons for a wider rollout.
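The before-and-after comparison is a percentage change per metric. The sketch assumes the three metrics above are collected for a baseline period and for the pilot under hypothetical keys such as `hours_to_create_tests`; any numbers you feed it should be your own measurements. Because lower is better for all three metrics, a negative change is an improvement.

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from before to after, in percent."""
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return 100.0 * (after - before) / before


def compare(baseline: dict[str, float], pilot: dict[str, float]) -> dict[str, float]:
    """Percentage change, rounded to one decimal, for every metric present in both periods."""
    return {k: round(percent_change(baseline[k], pilot[k]), 1)
            for k in baseline if k in pilot}
```

Metrics missing from either period are skipped rather than guessed, so an incomplete pilot log produces a shorter report instead of misleading zeros.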

Also, consider AI‑powered test selection for nightly runs to reduce costs. Teams must still evaluate the models and watch for bias. Good candidates include software QA for financial systems, e‑commerce checkout flows and B2B integrations: areas where accuracy matters and where AI can meaningfully reduce repetitive work. Finally, the future of QA will include more AI assistance, but the human role in setting intent and validating outcomes will remain essential.
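AI-powered test selection for nightly runs usually means ranking tests by a predicted value and running as many as a time budget allows. The sketch assumes each test already has a failure-probability estimate from some model (the `NightlyCandidate` type, the estimates and the budget are hypothetical) and picks greedily by probability per minute. Greedy selection is a simple heuristic for this knapsack-style problem, not an optimal solver.

```python
from dataclasses import dataclass


@dataclass
class NightlyCandidate:
    name: str
    minutes: float     # typical runtime; must be positive
    fail_prob: float   # model-estimated chance the test fails tonight (assumed input)


def pick_nightly(candidates: list[NightlyCandidate], budget_minutes: float) -> list[str]:
    """Greedily choose tests with the highest failure probability per minute within the budget."""
    if any(c.minutes <= 0 for c in candidates):
        raise ValueError("every test needs a positive runtime estimate")
    ranked = sorted(candidates, key=lambda c: c.fail_prob / c.minutes, reverse=True)
    chosen, used = [], 0.0
    for c in ranked:
        if used + c.minutes <= budget_minutes:  # skip tests that would overrun the budget
            chosen.append(c.name)
            used += c.minutes
    return chosen
```

Tests left out tonight still run in the next full regression, so the budget trades detection speed for cost rather than dropping coverage entirely.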

Governance, limitations and selecting top AI-powered QA tools

AI brings both power and risk, so governance matters. Limitations include model bias, potential over‑reliance and the need to maintain ML models over time. One whitepaper warned that organizations must address ethical and operational concerns when adopting generative AI in QA. Therefore, implement human review steps, data governance and traceability.

When selecting AI‑powered tools, evaluate model accuracy, depth of integration with CI/CD and test management, self‑healing quality and explainability. Also check security and compliance. Create a one‑page procurement rubric that scores vendors on integration, maintenance overhead and expected ROI. Candidates to evaluate include Copilot/GitHub Copilot, Testim, Mabl, Diffblue, Functionize and Applitools. Score them on how well they map into your frameworks, such as Playwright or JUnit, and how well they help maintain automated tests.
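The one-page rubric can be a weighted average. The criteria below come from the paragraph above; the weights and the 1-to-5 scores are placeholders a team would set itself, and no real vendor is scored here, so the example implies no ranking of any product.

```python
# Placeholder weights; adjust to your priorities (they need not sum to 1).
CRITERIA_WEIGHTS = {
    "model_accuracy": 0.20,
    "ci_and_test_mgmt_integration": 0.20,
    "framework_fit": 0.10,          # e.g. Playwright or JUnit support
    "self_healing_quality": 0.15,
    "explainability": 0.10,
    "security_and_compliance": 0.15,
    "maintenance_overhead": 0.05,   # score higher when overhead is lower
    "expected_roi": 0.05,
}


def weighted_score(scores: dict[str, int],
                   weights: dict[str, float] = CRITERIA_WEIGHTS) -> float:
    """Weighted average of 1-5 criterion scores; every weighted criterion must be scored."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"unscored criteria: {sorted(missing)}")
    total_weight = sum(weights.values())
    return round(sum(weights[c] * scores[c] for c in weights) / total_weight, 2)
```

Refusing to score a vendor with missing criteria forces the evaluation team to collect evidence for every row before comparing totals.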

Also, require vendors to explain how they handle data and how their models are retrained. Ask for a 90‑day adoption plan with success metrics such as coverage gain, test velocity and reduction in escaped defects. Additionally, include a pilot that runs AI‑generated tests in a controlled environment, and involve QA teams, development teams and security reviewers throughout. This cross‑functional review helps avoid surprises and ensures teams keep control over the tool.

Finally, tools like ChatGPT are useful for ideation and code snippets, but keep them separate from production systems. For production‑grade automation, prefer dedicated AI testing tools that link to your test management system and CI. Keep a continuous feedback loop so production incidents refine model training. This governance approach helps AI become a robust part of your quality management efforts while minimizing risk.

FAQ

What is an AI copilot for QA?

An AI copilot for QA is an assistant that proposes test cases, generates code snippets and suggests test scripts based on requirements, code and telemetry. It speeds up authoring, but a human reviewer should validate outputs before release.

How quickly can teams see value from an AI pilot?

Teams often see initial value within two weeks of a focused pilot that feeds a few user stories into the copilot. This reveals time savings, draft test yield and coverage improvements.

Do AI testing tools remove flaky tests automatically?

AI testing tools can reduce flakiness by using self‑healing locators and smarter element identification. However, teams must review auto‑changes and maintain guardrails to prevent drift.

How do I prioritise tests with AI?

Use ML‑based defect prediction that ranks modules by risk using commit history and telemetry. Then run targeted suites on high‑risk areas and feed incidents back into training for continuous improvement.

Can AI generate unit tests for legacy code?

Yes, AI can generate unit tests that increase coverage for legacy code. Teams should review generated tests and integrate them into the CI pipeline to ensure stability.

What governance is needed for AI in QA?

Governance requires human review, data access controls, audit logs and retraining policies for AI models. These elements reduce bias, ensure traceability and maintain quality over time.

Which tools should I evaluate first?

Start with vendors that integrate with your CI and test management. Consider Copilot/GitHub Copilot for snippets, and evaluate Testim, Mabl, Diffblue, Functionize and Applitools for fuller automation.

How do AI and traditional QA work together?

AI augments traditional QA by taking on repetitive tasks, proposing test scenarios and keeping suites updated. Human testers focus on exploratory testing, validation and scenario design.

Is ChatGPT useful for test generation?

ChatGPT can ideate and draft test scenarios, but production tests should come from tools that link directly to CI and test management for traceability and reproducibility.

How should I measure success for an AI QA rollout?

Measure coverage gain, test velocity, reduction in maintenance hours, and escaped defects in production. Use these metrics to iterate on tooling and governance plans.

Ready to revolutionize your workplace?

Achieve more with your existing team with Virtual Workforce.